Tips for abstract screening in a systematic review

The search is over

Congratulations, your search for studies is complete. You have followed the process specified in your protocol and reached an important milestone in your systematic review. You now have hundreds, or perhaps thousands, of references and a review team eager to take a closer look. So what’s next?

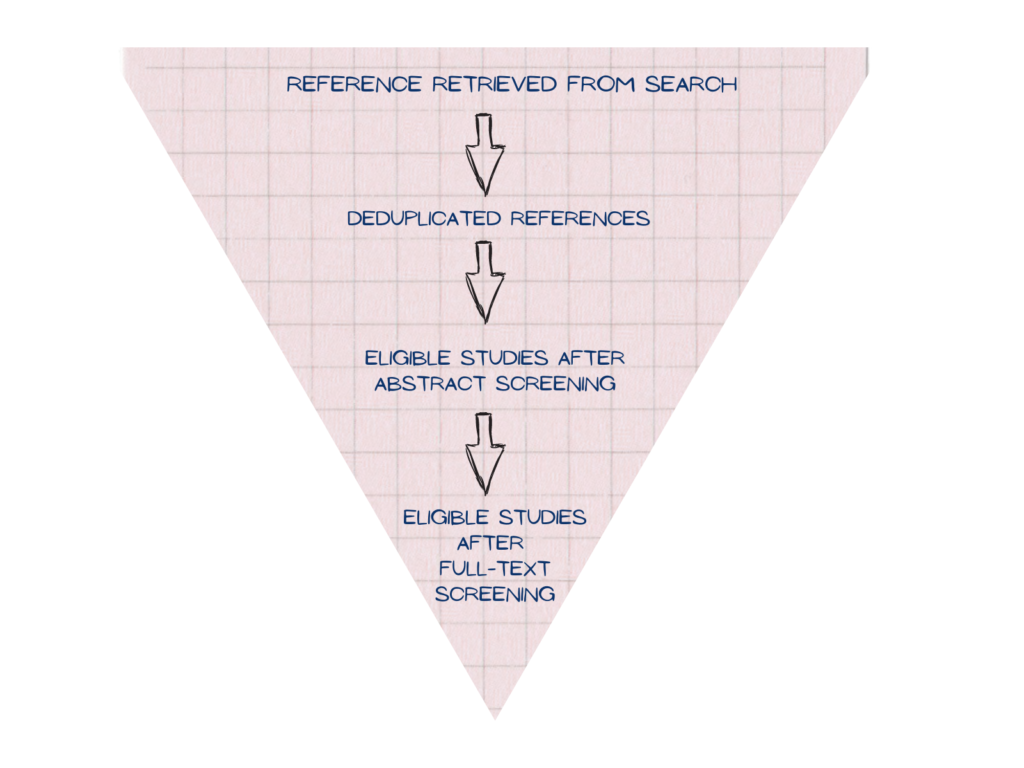

The number of references you retrieve from a search can be daunting. In the process of manually selecting some of these studies for further investigation, your team will make many important decisions, and any of them can introduce bias. Good study selection requires a rigorous process, and the first stage in that process is abstract screening.

At the abstract screening stage you will keep the references that meet the inclusion criteria for your review and those that you are unsure about. You will dismiss the rest. The references you keep will move to the next stage in the selection process: full-text screening.

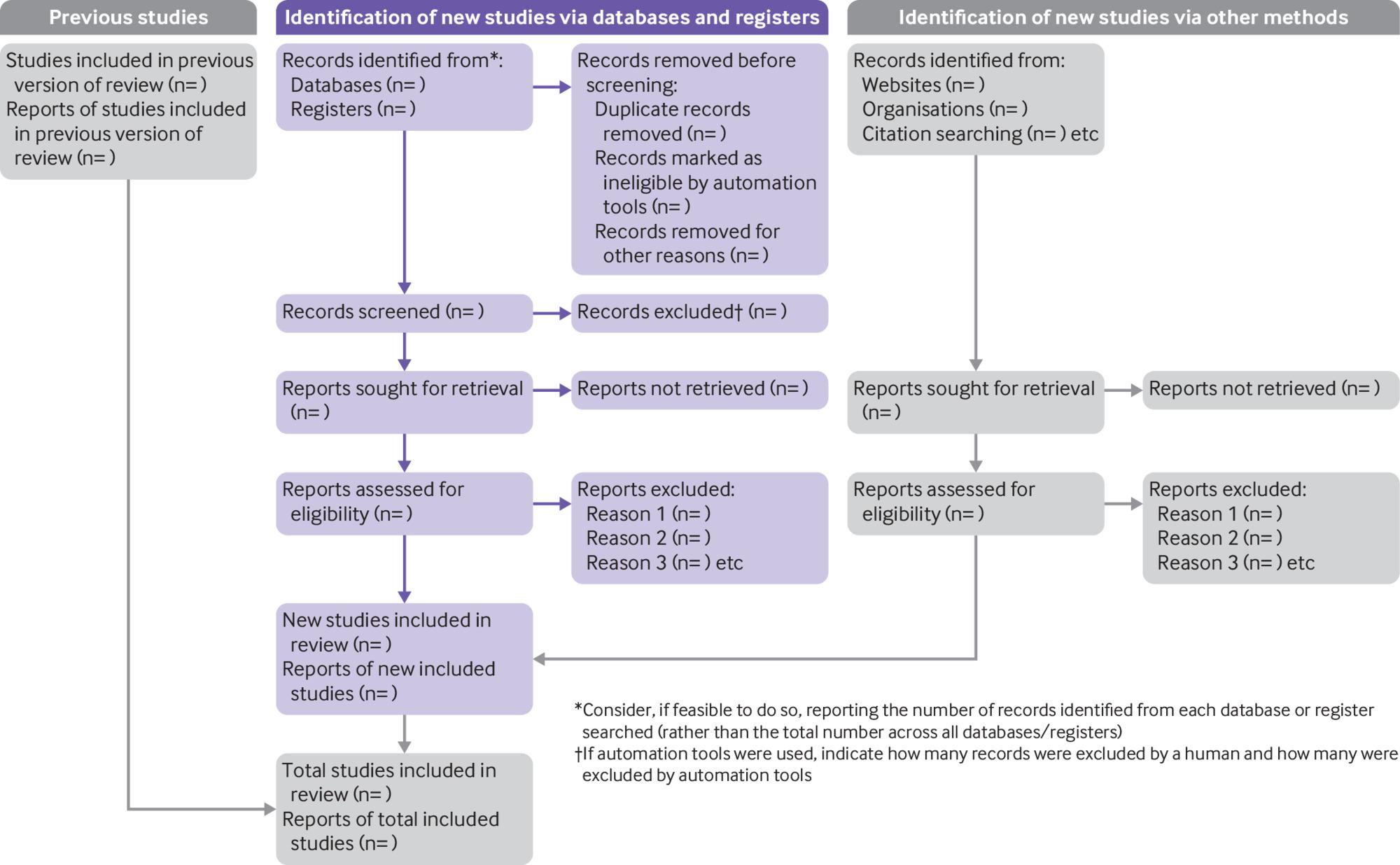

Abstract screening presents an opportunity to save time in the study selection process, because every report correctly excluded at this stage is one fewer to assess at full text later. It is worth planning abstract screening carefully and thinking about it in the context of the flow of information through your review: from the identification of references, through screening and eligibility checks, to the eventual exclusion or inclusion of studies in the analysis (see figure 2)¹. These guidelines will help you to develop and implement an abstract screening process that is straightforward and reliable.

Four stages of abstract screening

It’s useful to consider four key stages of abstract screening:

- Plan – the process begins with organising the references, preparing screening questions and briefing the review team.

- Pilot – the screening process is pilot-tested on a small number of references, and adjusted on the basis of reviewer feedback.

- Screen – the eligibility of each reference is assessed, screening decisions are monitored, and disagreements between reviewers are resolved.

- Log – information is recorded for reporting and future reference in a manner that allows easy access and data sharing.

Let’s consider each stage in detail.

1. Plan

Before screening begins, it’s important to organise and store the references efficiently, for example in reference management software or in Covidence. Your choice of tool will depend on factors such as the type of systematic review and the number of references. These guidelines are intended for systematic reviews that have identified 1,000 or more references.

Preparing to screen involves formulating questions based on the review’s prespecified eligibility criteria. The questions should be as simple and unequivocal as possible. It’s a good idea to order the questions by importance so that a reference can be excluded as soon as it fails to meet a criterion, for example:

- Was the study conducted in the USA?

- Was the study conducted in an elementary school?

- Was some or all of the study population female?

In this way, reviewers can move straight on to the next reference without answering the remaining questions. A trained librarian or information specialist can advise you on screening questions and provide expert guidance based on experience. Once the eligibility criteria are agreed, the team can document them in the Review Settings section in Covidence, where they are displayed above the abstracts for easy reference during screening.
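The early-exit ordering described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a feature of Covidence or any other tool: each reference is modelled as a plain dictionary of what its title and abstract report, with hypothetical field names (`country`, `setting`, `any_female`), and a missing field means the abstract is silent on that point. Only a definite ‘no’ excludes a reference; an unanswered question keeps it in play as a ‘maybe’, in line with keeping references you are unsure about.

```python
from typing import Callable, Optional

# A reference is a dict of what the title/abstract reports.
# Field names are hypothetical; a missing field means "not reported".
Reference = dict

# Each question returns True (met), False (not met) or None (not reported).
Question = Callable[[Reference], Optional[bool]]


def field_equals(field: str, value) -> Question:
    """Build a question that checks one reported field against a required value."""
    def check(ref: Reference) -> Optional[bool]:
        if field not in ref:
            return None  # the abstract does not say
        return ref[field] == value
    return check


# Questions in order of importance, mirroring the examples above.
QUESTIONS: list[tuple[str, Question]] = [
    ("Was the study conducted in the USA?", field_equals("country", "USA")),
    ("Was the study conducted in an elementary school?",
     field_equals("setting", "elementary school")),
    ("Was some or all of the study population female?",
     field_equals("any_female", True)),
]


def screen(ref: Reference) -> tuple[str, Optional[str]]:
    """Return ('no', failed question) at the first unmet criterion, else 'yes' or 'maybe'."""
    unclear = False
    for question, check in QUESTIONS:
        answer = check(ref)
        if answer is False:
            return "no", question  # exclude now; later questions are never asked
        if answer is None:
            unclear = True  # keep going: a later question may still exclude it
    return ("maybe" if unclear else "yes"), None
```

For example, `screen({"country": "UK"})` stops at the first question and returns `("no", "Was the study conducted in the USA?")`, while a US elementary-school study whose abstract does not describe the population returns `("maybe", None)`.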

At the planning stage you will also allocate the task of screening to two or more members of your team. Using two or more reviewers, who must work independently, provides a check on decisions and reduces the risk that a study is included or excluded incorrectly. Conflicting decisions (one reviewer wants to include a study, another wants to exclude it) take time to resolve, so effort invested in the planning phase, for example in a thorough team briefing, will repay you in efficiencies down the line. We will return to the issue of conflict resolution in stages two, three, and four.

2. Pilot

It’s impossible to foresee all the potential complications that your team could encounter during screening. Pilot-testing a small set of references (Cochrane recommends six to eight, including a definite yes, a definite no, and a maybe) will uncover some of them. Perhaps an ambiguous question or a gap in communication has led a reviewer to an incorrect decision on the eligibility of some studies, creating a conflict that needs to be investigated. Issues like this can be identified and addressed after the pilot test and before screening is scaled up to the whole reference set.

3. Screen

Before beginning large-scale screening, review teams may run the automated deduplication processes available in reference management software; Covidence automatically deduplicates all references imported into a review project.

Reviewers may also identify duplicate references during screening. These are two or more identical records, often the result of searching multiple databases. Review teams can agree a process for managing them at the planning stage.
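To make the idea of automated deduplication concrete, here is a minimal Python sketch. It is an assumption for illustration only and does not reproduce the algorithm used by Covidence or any reference manager: a record is keyed on its DOI when one is present, otherwise on a normalised title plus publication year, and the first record for each key is kept. The field names (`doi`, `title`, `year`, `source`) are hypothetical.

```python
import re


def normalise_title(title: str) -> str:
    """Lower-case and collapse everything except letters and digits to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def dedupe_key(ref: dict) -> tuple:
    """Prefer the DOI when present; otherwise fall back to title + year."""
    doi = (ref.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    return ("title", normalise_title(ref.get("title", "")), ref.get("year"))


def deduplicate(refs: list[dict]) -> tuple[list[dict], list[dict]]:
    """Keep the first record for each key; return (unique, duplicates) for the team to check."""
    seen: set = set()
    unique: list[dict] = []
    duplicates: list[dict] = []
    for ref in refs:
        key = dedupe_key(ref)
        if key in seen:
            duplicates.append(ref)
        else:
            seen.add(key)
            unique.append(ref)
    return unique, duplicates


# Illustrative records only; the titles and DOIs are made up.
refs = [
    {"title": "Reading outcomes in grade 3", "year": 2019, "doi": "10.1000/xyz1", "source": "MEDLINE"},
    {"title": "Reading Outcomes in Grade 3.", "year": 2019, "doi": "10.1000/XYZ1", "source": "ERIC"},
    {"title": "Maths anxiety in girls", "year": 2020, "source": "ERIC"},
    {"title": "Maths Anxiety in Girls", "year": 2020, "source": "PsycINFO"},
]
unique, duplicates = deduplicate(refs)
```

Returning the duplicates rather than silently dropping them leaves room for the team’s agreed process. Note that this only catches repeated records of the same report: a record with a DOI and a copy without one will not match, and separate reports of the same study are deliberately left alone.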

As teams work through a set of references it’s also common to find multiple published reports of the same study. Apply the eligibility criteria to each one. Later in the review process you will collate multiple reports of the same study (so as not to count the same data several times). But for now, you must not discard any references whose full text could contain information to help to determine study eligibility later on.

During the first stage of screening, Title & Abstract Screening, reviewers will be asked to vote on whether a study is eligible for inclusion by selecting ‘yes’ (the study is eligible), ‘no’ (the study is ineligible), or ‘maybe’ (eligibility is unclear). In most cases, reviews will be configured so that two reviewers vote independently on each reference. If both reviewers vote ‘yes’ or ‘maybe’ (in any combination), the reference is moved to Full Text Review, the second stage of screening. If both reviewers vote ‘no’, the reference is moved to ‘Irrelevant’ and removed from further consideration by the review team.

If the reviewers’ votes conflict (one ‘yes’ or ‘maybe’ vote and one ‘no’ vote), the reference is moved to the ‘Resolve conflicts’ list, where the team’s agreed conflict resolution process is applied. Good planning and pilot-testing can keep disagreement between reviewers to a minimum but will not remove it entirely. Disagreement can usually be resolved by discussion until the reviewers reach consensus. If that is not possible, the reference should be referred to another member of the team for a final decision.
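The two-reviewer voting rules above can be summarised as a small decision function. This Python sketch simply restates the rules as described; it is not Covidence code, and the `Vote` enum and `route` function are hypothetical names chosen for illustration. The key point it captures is that ‘maybe’ counts as a vote to keep the reference.

```python
from enum import Enum


class Vote(Enum):
    YES = "yes"
    MAYBE = "maybe"
    NO = "no"


def route(vote_a: Vote, vote_b: Vote) -> str:
    """Where a reference goes after two independent title/abstract votes."""
    a_keeps = vote_a is not Vote.NO  # 'yes' and 'maybe' both keep the reference
    b_keeps = vote_b is not Vote.NO
    if a_keeps and b_keeps:
        return "Full Text Review"   # yes/yes, yes/maybe or maybe/maybe
    if not a_keeps and not b_keeps:
        return "Irrelevant"         # no/no
    return "Resolve conflicts"      # one keep vote and one 'no'
```

So `route(Vote.YES, Vote.MAYBE)` sends the reference on to full text, while `route(Vote.MAYBE, Vote.NO)` places it in the conflict list for discussion or a third reviewer.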

Using a review project management tool such as Covidence, which facilitates collaboration by creating rules, assigning roles, and setting notifications, can save time here. A lead reviewer needs the oversight to monitor decision data as they are produced and the agility to solve problems as they arise. All reviewers need easy collaboration and fast feedback to stay engaged and motivated during a process that is necessarily repetitive and can become tedious if it is not managed well.

4. Log

To ensure transparency and standardised reporting, the study selection process must be documented in the review. It is advisable to keep detailed records in parallel with the screening activity itself, starting with the number of studies retrieved by the search. The PRISMA checklist for the content of a systematic review makes this requirement for the methods section of a review: ‘Specify the methods used to decide whether a study met the inclusion criteria of the review, including how many reviewers screened each record and each report retrieved, whether they worked independently, and if applicable, details of automation tools used in the process.’ PRISMA also provides a flow diagram generator that produces a chart similar to the one shown in figure 2.
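Keeping counts in parallel with screening is easier if the arithmetic linking the flow-diagram boxes is checked as you go. The Python sketch below is a hypothetical helper, not part of PRISMA or Covidence: it records the number of records identified, duplicates removed before screening, and exclusions at title/abstract screening, and derives the number screened and the number passed on to full-text screening. PRISMA 2020 also allows other reasons for removal before screening; this sketch assumes duplicates are the only one.

```python
from dataclasses import dataclass


@dataclass
class ScreeningFlow:
    """Running counts for the identification and screening boxes of a flow diagram."""

    identified: int          # records retrieved by the search
    duplicates_removed: int  # records removed before screening
    excluded: int = 0        # records excluded at title/abstract screening

    def __post_init__(self) -> None:
        if not 0 <= self.duplicates_removed <= self.identified:
            raise ValueError("duplicates_removed must lie between 0 and identified")

    @property
    def screened(self) -> int:
        """Records that entered title/abstract screening."""
        return self.identified - self.duplicates_removed

    @property
    def sought_for_retrieval(self) -> int:
        """Records passed on to full-text screening."""
        return self.screened - self.excluded

    def record_exclusion(self, n: int = 1) -> None:
        """Log n more exclusions, refusing counts that would not add up."""
        if n < 0 or self.excluded + n > self.screened:
            raise ValueError("exclusions must be non-negative and cannot exceed records screened")
        self.excluded += n
```

With made-up numbers, `ScreeningFlow(identified=1200, duplicates_removed=150)` reports 1,050 records screened, and after `record_exclusion(900)` it reports 150 sought for retrieval. Because every figure is derived from the logged counts, the numbers in the flow diagram cannot silently drift out of step with each other.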

You may wish to retain data about the screening process that is not included in the review itself, either for your own reference or to provide on request to other researchers. In either case, any data that you archive should be well organised and easily accessible.

Once the abstract screening process is complete, you can calculate the level of agreement among the reviewers (the interrater reliability), for example using Cohen’s kappa. Interrater reliability statistics can be used to catch ‘coder drift’, the tendency for reviewers to deviate from the process as it becomes more familiar to them. Further training of reviewers helps to improve agreement.
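Cohen’s kappa compares the observed agreement between two reviewers, p_o, with the agreement expected by chance from each reviewer’s own vote frequencies, p_e, as κ = (p_o − p_e) / (1 − p_e). The plain-Python sketch below computes it for two reviewers’ ‘yes’/‘maybe’/‘no’ votes on the same references; the function name is ours, and libraries such as scikit-learn offer an equivalent (`sklearn.metrics.cohen_kappa_score`).

```python
from collections import Counter


def cohens_kappa(votes_a: list[str], votes_b: list[str]) -> float:
    """Cohen's kappa for two reviewers who voted on the same references, in the same order."""
    if len(votes_a) != len(votes_b) or not votes_a:
        raise ValueError("need two non-empty vote lists of equal length")
    n = len(votes_a)
    # Observed agreement: share of references where both reviewers voted the same way.
    p_o = sum(a == b for a, b in zip(votes_a, votes_b)) / n
    # Chance agreement: for each category, product of the two reviewers' vote proportions.
    freq_a, freq_b = Counter(votes_a), Counter(votes_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1:
        return float("nan")  # kappa is undefined when chance agreement is 1
    return (p_o - p_e) / (1 - p_e)


reviewer_a = ["yes", "no", "no", "maybe", "no", "yes"]
reviewer_b = ["yes", "no", "yes", "maybe", "no", "no"]
kappa = cohens_kappa(reviewer_a, reviewer_b)  # (4/6 - 14/36) / (1 - 14/36) = 5/11
```

Here the reviewers agree on four of six references (p_o = 2/3), but chance alone would produce p_e = 14/36, so κ = 5/11 ≈ 0.45. One way to watch for coder drift, assuming votes are logged as you go, is to compute kappa on successive batches of references rather than only once at the end, so that falling agreement shows up while retraining can still help.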

Conclusion

Abstract screening is a simple process, but it requires a disciplined and consistent approach. It is also one of the most time-consuming parts of the systematic review process, and every effort must be made to minimise the risk of bias. Planning and refining a robust screening process will help make the screening itself as effective as possible. Monitoring the data during screening enables early identification of problems and supports the continued smooth running of the review.

1. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. doi: 10.1136/bmj.n71